NSF-REU Program 2022 at UMBC

Professional Development Activities

Invited Talks from UMBC Leaders

Participation in External Activities Organized by the UMBC Louis Stokes Alliances for Minority Participation (LSAMP) Program

Weekly Individual Research Meeting and Training

SenseBox v2.0: A Robust Smart Home System for Activity Recognition and Behavioral Monitoring

REU student: Adam Golstein

Mentors: Indrajeet Ghosh, Dr. Nirmalya Roy

Abstract—In the past few years, Internet of Things (IoT), artificial intelligence, and pervasive computing technologies have contributed to the next generation of context-aware systems with robust interoperability for controlling and monitoring the environmental variables of smart homes. Motivated by this, we propose SenseBox v2.0, a multifunctional automated smart home system for smart home control applications. SenseBox v2.0 also provides a platform for human activity recognition (HAR) and behavioral monitoring. In SenseBox v2.0, we focus on tackling the following challenges for real-world deployment: data fragmentation, time desynchronization, network complexity, and system fragility. To address these challenges, SenseBox v2.0 will integrate Home Assistant (HA) to aggregate data on a local hub device, which synchronizes with a server as needed. Because HA has broad community support, this design makes the system more easily interoperable with future sensor technologies. We surveyed over 50 commercial off-the-shelf (COTS) sensor solutions and narrowed our selection down to one model of each of the following: a passive infrared (PIR) sensor, a reed (door) sensor, an object-tag motion sensor, a wrist-worn wearable accelerometer, and an IP camera. The first three sensors use the Zigbee open standard for mesh networking, whereas the wearable sensor (the Empatica E4) uses Bluetooth Low Energy and the IP camera uses Wi-Fi. Lastly, we assembled these devices into a system running HA on an x64-based hub, as required by the Empatica E4's system requirements. In the future, we will evaluate the proposed system in terms of data rate loss, network latency, battery life, and computational resource consumption.
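The abstract lists data rate loss as a planned evaluation metric but does not define it. The sketch below assumes that data rate loss is the fraction of samples missing from a sensor stream, relative to its nominal sampling rate over a time window. The function name, its parameters, and the 32 Hz rate in the usage example are hypothetical and for illustration only.

```python
def data_rate_loss(sample_times, nominal_hz, start_s, end_s):
    """Fraction of expected samples missing from one sensor stream.

    Hypothetical helper. Assumed definition: 1 - received / expected, where
    expected = nominal_hz * (end_s - start_s) and received counts the
    timestamps (seconds, hub clock) that fall inside [start_s, end_s).
    """
    if end_s <= start_s or nominal_hz <= 0:
        raise ValueError("need a positive window and a positive nominal rate")
    expected = nominal_hz * (end_s - start_s)
    received = sum(1 for t in sample_times if start_s <= t < end_s)
    # Duplicated or bursty samples can push received above expected; clamp at zero.
    return max(0.0, 1.0 - received / expected)


if __name__ == "__main__":
    # Simulate a 32 Hz stream over 10 s in which every tenth sample is dropped.
    times = [i / 32 for i in range(320) if i % 10 != 0]
    print(f"data rate loss: {data_rate_loss(times, 32, 0.0, 10.0):.1%}")
```

Computing the metric per sensor and per window would show which link (Zigbee, Bluetooth Low Energy, or Wi-Fi) drops data, and when.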

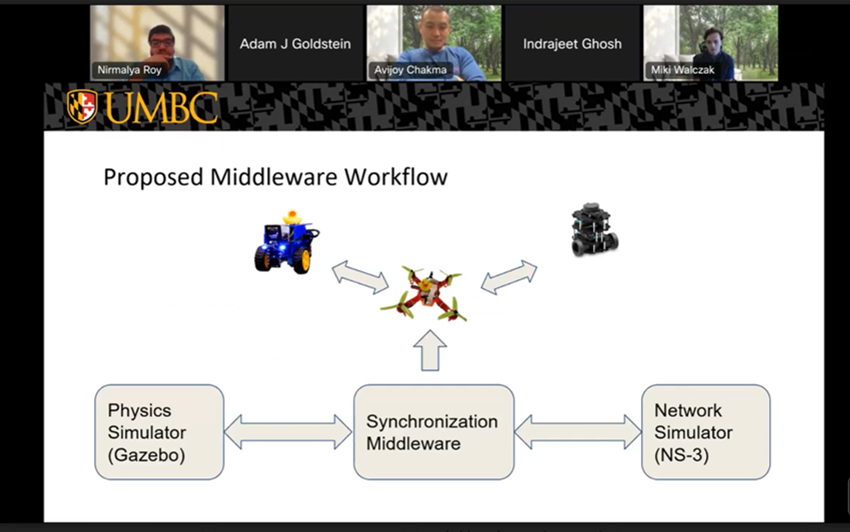

Synchronizing Middleware for a Heterogeneous Multi-Robot System With Low Latency Co-Simulation

REU student: Mikolaj Walczak

Mentors: Emon Dey, Mohammad Saeid Anwar, Dr. Nirmalya Roy

Abstract—In a heterogeneous multi-robot system, keeping the robots synchronized with one another is one of the most difficult problems to address. Differences in clock, transmission, and simulation speeds can cause such a system to become desynchronized. The system described in this paper will be deployed in battlefield scenarios, where there is no room for error or for the risk of desynchronization. To address this issue, we propose an extension of the synchronizing middleware SynchroSim to minimize desynchronization and optimize communication between robotic agents. SynchroSim has been tested in a co-simulation of the physics simulator Gazebo and the network simulator NS-3. Building on these simulations, we are able to transition our research toward real robotic agents and terrain. To test the middleware, we chose a variety of robots likely to expose synchronization issues, in a demonstration with three agents: a Duckiedrone and two slave nodes, a TurtleBot3 Burger and a Duckiebot. The Duckiedrone acts as the master node and controls communication between the two slave nodes. Currently, communication is handled by the Transmission Control Protocol (TCP). Although TCP is reliable, its acknowledgments and retransmissions consume considerable bandwidth and time. To compensate for this, we have been investigating the faster but less reliable User Datagram Protocol (UDP) and analyzing its efficacy in the multi-robot system. To evaluate the performance of our proposed middleware, we plan to use two of the most important metrics for communication systems: average latency and packet loss percentage. This research should encourage the use of low-latency communication over reliable communication in multi-robot systems.
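The two planned metrics can be computed directly from send and receive logs. UDP does not number its datagrams, so the sketch assumes that each packet carries an application-level sequence number. It also assumes that sender and receiver clocks are synchronized, which is itself the problem SynchroSim targets. Without synchronized clocks, round-trip time would be the safer latency measure. The function name and log format are hypothetical.

```python
import math


def link_metrics(sent, received):
    """Average one-way latency (s) and packet loss (%) for one link.

    Hypothetical helper.
    sent:     {sequence_number: send_time_s}
    received: {sequence_number: receive_time_s}
    Assumes both timestamps come from synchronized clocks.
    """
    if not sent:
        raise ValueError("no packets were sent")
    delivered = [seq for seq in sent if seq in received]
    loss_pct = 100.0 * (len(sent) - len(delivered)) / len(sent)
    if delivered:
        avg_latency = sum(received[s] - sent[s] for s in delivered) / len(delivered)
    else:
        avg_latency = math.nan  # nothing arrived, so latency is undefined
    return avg_latency, loss_pct


if __name__ == "__main__":
    sent = {0: 0.00, 1: 0.10, 2: 0.20, 3: 0.30}
    received = {0: 0.01, 1: 0.13, 3: 0.32}  # packet 2 never arrived
    latency, loss = link_metrics(sent, received)
    print(f"avg latency {latency * 1000:.1f} ms, loss {loss:.0f}%")
```

Running the same logs through this function for a TCP configuration and a UDP configuration puts the latency versus reliability trade-off on one scale.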

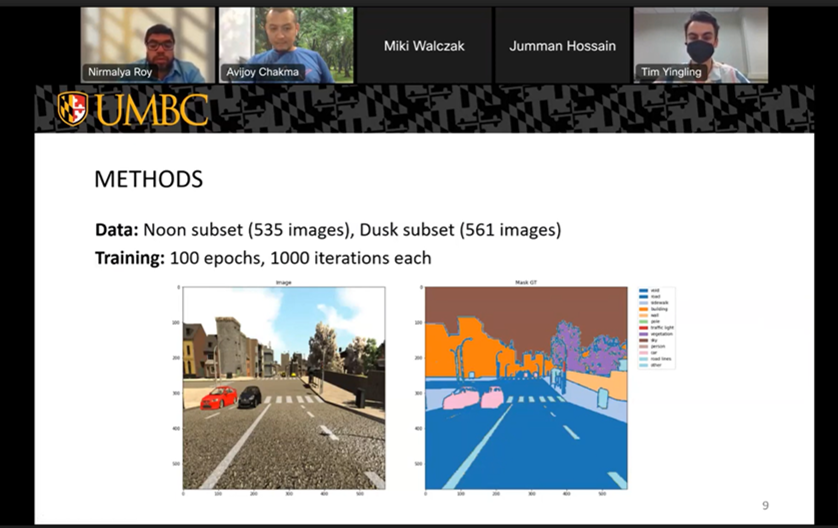

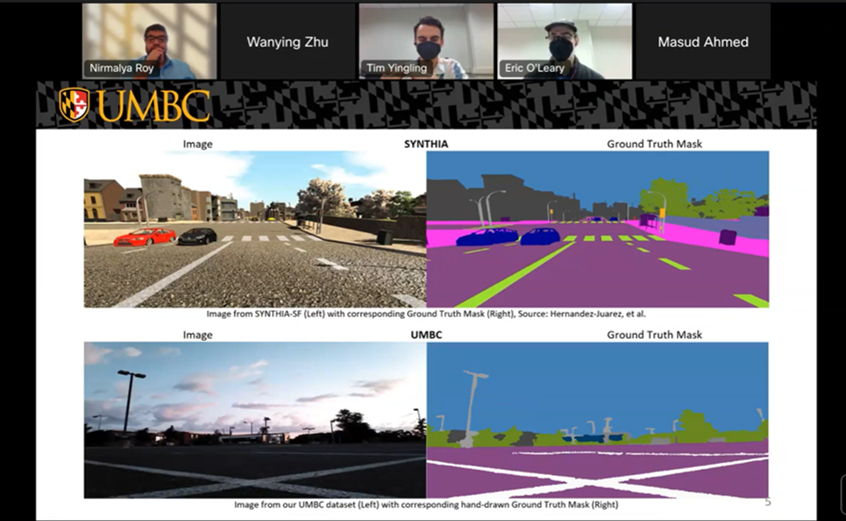

Altering Target Domain Images Across Different Lighting Conditions for Unsupervised Domain Adaptation

REU student: Tim Yingling

Mentors: Masud Ahmed, Dr. Nirmalya Roy

Abstract—Deep learning models for semantic segmentation struggle to adapt to environments other than the one they were trained on. Domain adaptation aims to address this issue; however, as the dissimilarity between source and target increases, the performance of the adapted model tends to decrease. In this paper, we propose a model that addresses the performance drop caused by non-ideal lighting conditions, where the target has higher or lower light intensity than the source. Our solution alters the contrast of the target images to resemble that of the source images so that classes are easier to identify. For our experiments, we collected our own target dataset of 1619 annotated low-angle images taken from a ROSbot 2.0 and a ROSbot 2.0 Pro in a suburban area at different times of day, and we use the SYNTHIA-SF dataset as our source. Multiple DeepLabV3+ models with a ResNet50 backbone are trained on the noon and dusk subsets of the data, with the target domain's contrast altered by factors of 0.5 and 1.5. Performance was similar across the noon models and across the dusk models, although, as expected, the dusk models performed worse than the noon models. Slight variations can be observed at the class level, but we observed no significant difference that would allow us to conclude that altering the contrast of the target domain affected performance.
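The abstract does not say how the contrast factor is applied. A common definition scales each pixel's deviation from the image's mean intensity by the factor. Pillow's `ImageEnhance.Contrast` uses a similar blend toward a mean gray level, though it computes that mean from luminance. The sketch below assumes this definition, using a plain global mean over all pixels and channels. `adjust_contrast` is a hypothetical name.

```python
import numpy as np


def adjust_contrast(image, factor):
    """Scale an 8-bit image's contrast around its global mean intensity.

    Hypothetical helper: factor < 1 lowers contrast, factor > 1 raises it,
    factor == 1 leaves the image unchanged.
    """
    img = np.asarray(image, dtype=np.float32)
    mean = img.mean()  # plain mean over all pixels and channels (assumption)
    out = mean + factor * (img - mean)
    # Round, then clip back into the valid 8-bit range before casting.
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)


if __name__ == "__main__":
    target = np.array([[0, 100], [200, 100]], dtype=np.uint8)
    print(adjust_contrast(target, 0.5))  # flattened toward the mean
    print(adjust_contrast(target, 1.5))  # stretched, with clipping at 0
```

Because the adjustment clips at 0 and 255, a factor of 1.5 on an already bright or dark target can lose detail. This is one plausible reason the per-class differences stayed small.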

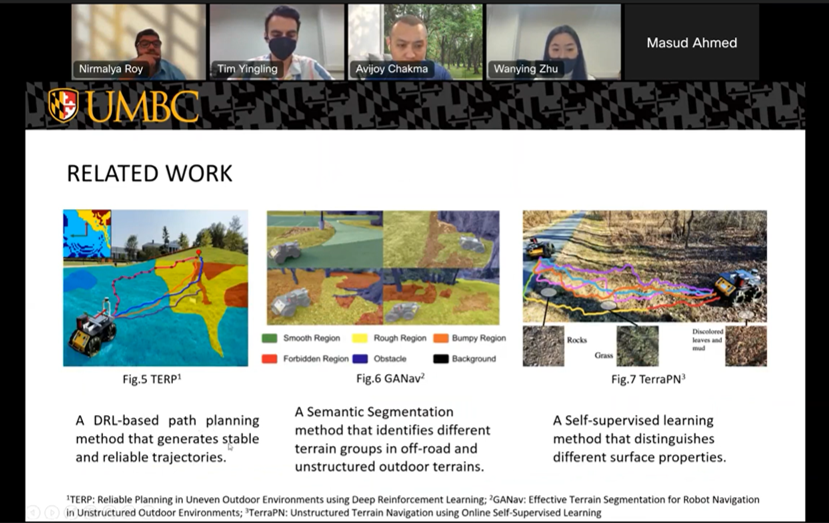

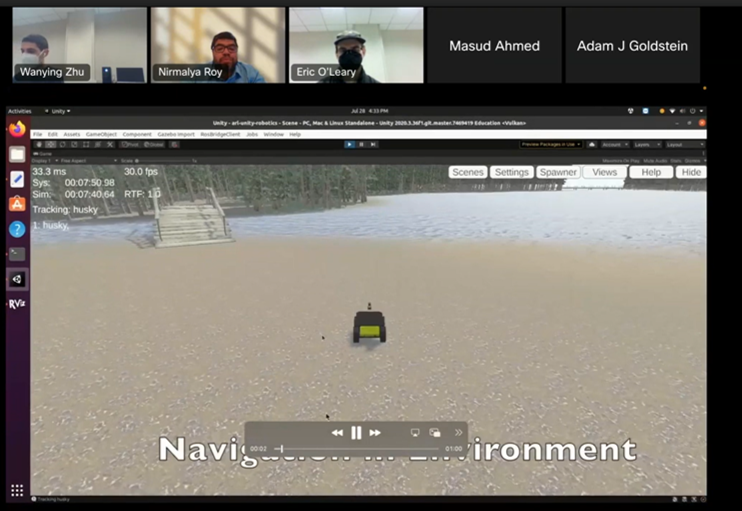

Maximum-Cover Following Trajectory Planning in Unstructured Outdoor Environments with Deep Reinforcement Learning

REU student: Wanying Zhu

Mentors: Jumman Hossain, Dr. Nirmalya Roy

Abstract—In a military combat context or other contested environment, a robot sometimes needs to move under cover to avoid being detected or attacked by adversarial agents. To move the robot with maximum cover, we present a novel Deep Reinforcement Learning (DRL)-based method for identifying covert and navigable trajectories in off-road terrain and jungle environments. Our approach focuses on seeking shelter and cover while safely navigating to a predefined destination. Using an elevation map generated from 3D point clouds, the robot pose, and goal information as input, our method computes a local cost map that identifies the path granting maximal covertness while maintaining a low-cost trajectory. Elevation is another determining factor, since high ground is easily targeted. Moreover, staying at low elevation makes the robot less visible, because surrounding objects become natural shelters that block the view. Our agent learns to stay at low elevation through its reward function, which assigns a penalty whenever the robot gains elevation. With the assistance of another DRL-based navigation algorithm, this approach guarantees dynamically feasible velocities while matching state-of-the-art performance. We evaluate our model on a Clearpath Husky robot in the ARL Unity simulation environment.
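The elevation-penalty idea can be shown as one step of a shaped reward. The abstract says only that elevation gain is penalized. The progress term, the goal bonus, the weights, and the names `State` and `cover_reward` are hypothetical choices for this sketch, not the authors' reward function.

```python
import math
from dataclasses import dataclass


@dataclass
class State:
    x: float
    y: float
    z: float  # elevation looked up in the elevation map at the robot's cell


def cover_reward(prev, curr, goal, w_progress=1.0, w_elev=2.0,
                 goal_radius=0.5, goal_bonus=10.0):
    """Hypothetical per-step reward: progress to goal minus elevation-gain penalty."""
    d_prev = math.hypot(goal[0] - prev.x, goal[1] - prev.y)
    d_curr = math.hypot(goal[0] - curr.x, goal[1] - curr.y)
    reward = w_progress * (d_prev - d_curr)  # positive when moving closer
    # Penalize only climbing; descending or staying level costs nothing.
    reward -= w_elev * max(0.0, curr.z - prev.z)
    if d_curr < goal_radius:
        reward += goal_bonus
    return reward


if __name__ == "__main__":
    goal = (10.0, 0.0)
    print(cover_reward(State(0, 0, 0), State(1, 0, 0), goal))    # level step
    print(cover_reward(State(0, 0, 0), State(1, 0, 0.5), goal))  # climbing step
```

A cover term derived from the local cost map, such as a bonus for cells occluded from likely observers, would be added in the same way.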

Robustness of Unsupervised Domain Adaptation against Adversarial Attacks and Blur

REU student: Eric O'Leary

Mentors: Masud Ahmed, Dr. Nirmalya Roy

Abstract—Domain adaptation has been widely used in semantic segmentation to allow deep learning models to be trained on datasets without labels. However, the robustness of these models remains largely unexplored. In this paper, we introduce distortions from adversarial attacks and Gaussian blur into a target domain of real-world images to create a more robust model. Our source domains are SYNTHIA-SF and Cityscapes. For our target domain, we collected and partially soft-labeled a dataset of seventeen image sequences captured with a ROSbot 2.0 and a ROSbot 2.0 Pro around the UMBC campus at noon, sunset, and sunrise. Our models will be built on DeepLabV3Plus with a ResNet50 backbone, modified for unsupervised domain adaptation using focal loss.
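Focal loss multiplies the per-pixel cross-entropy by $(1 - p_t)^\gamma$, where $p_t$ is the predicted probability of the true class. This down-weights pixels the model already classifies confidently. The NumPy sketch below is for a single image. It assumes hard integer labels, with unlabeled pixels marked by a hypothetical ignore index of 255. It does not model the soft labels mentioned above, and it uses no class-balancing weight α.

```python
import numpy as np


def softmax(logits, axis=0):
    z = logits - logits.max(axis=axis, keepdims=True)  # for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def focal_loss(logits, labels, gamma=2.0, ignore_index=255):
    """Mean focal loss over labeled pixels.

    logits: (C, H, W) raw class scores; labels: (H, W) integer class map.
    Pixels equal to ignore_index (assumed value) are excluded.
    """
    probs = softmax(logits, axis=0)
    valid = labels != ignore_index
    if not valid.any():
        return 0.0
    safe = np.where(valid, labels, 0)  # placeholder class for ignored pixels
    p_t = np.take_along_axis(probs, safe[None], axis=0)[0]
    loss = -((1.0 - p_t) ** gamma) * np.log(np.clip(p_t, 1e-12, 1.0))
    return float(loss[valid].mean())
```

With `gamma=0` the function reduces to ordinary cross-entropy, which makes a convenient sanity check.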

Simulated Forest Environment and Robot Control Framework for Integration with Tree Detection Algorithms

REU student: Avi Spector

Mentors: Jumman Hossain, Dr. Nirmalya Roy

Abstract—Simulated environments can be a quicker and more flexible alternative to the real world for training and testing machine learning models. Models also need to be able to communicate efficiently with the environment. In military-relevant environments, a trained model can play a valuable role in finding cover for an autonomous robot so that it avoids being detected or attacked by adversaries. To this end, we present a forest simulation and robot control framework that is ready for integration with machine learning algorithms. Our framework includes an environment relevant to military situations and can provide information about the environment to a machine learning model. The forest environment was designed with wooded areas, open paths, water, and bridges. A Clearpath Husky robot is simulated in the environment using the Army Research Laboratory's (ARL) Unity and ROS simulation framework. The Husky robot is equipped with a camera and a lidar sensor. Data from these sensors can be read through ROS topics and RViz configuration windows, and the robot can be moved using ROS velocity command topics. A machine learning algorithm that detects trees can use these communication channels to attain maximum cover. Our environment improves on the default ARL framework environments by offering more diverse terrain and more opportunities for cover, which makes it more relevant to a cover-seeking machine learning model.

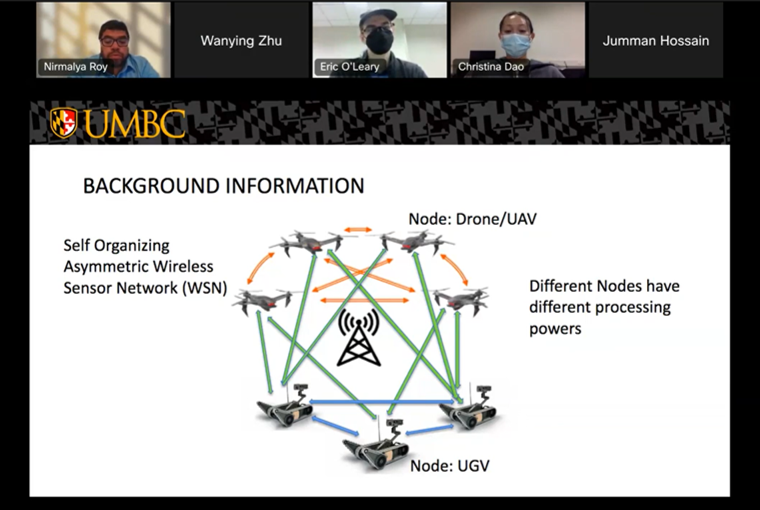

Self-Organization of Asymmetric Wireless Sensor Networks Enables Multiple Deep Learning Algorithms to Run Concurrently

REU student: Christina Dao

Mentors: Saeid Anwar, Sreenivasan Ramasamy Ramamurthy, Dr. Kasthuri Jayarajah, Dr. Nirmalya Roy

Abstract—Implementing deep learning algorithms within wireless sensor networks is a highly applicable method for data collection. In the military, situational awareness is very important. Unmanned ground and aerial vehicles (UGVs and UAVs) can provide intelligence and surveillance when combined with deep learning applications. Deploying these UGVs and UAVs can increase their utilization while allowing soldiers to be stationed elsewhere. Sensors placed on multiple UGVs and UAVs, together with fast and accurate data processing, would be highly advantageous on the battlefield. We want to run several applications (such as object detection, image segmentation, and face recognition) concurrently on the devices of asymmetric, heterogeneous wireless sensor networks; however, the complexity of such networks comes with resource constraints. The different operations require different amounts of computational power, and the nodes have varying performance characteristics. The wireless sensor network must therefore be self-organizing: each node that collects data must be able to decide whether it can process that data itself. Nodes also need to be able to initiate another course of action, such as transferring the data to a more powerful node for processing. We must consider which data types need to be sent and to which device the data should be sent for processing. We study the system resources and processes of each individual node in the network both before and while the deep learning algorithms run. Analyzing latency, accuracy, energy, and other system metrics allows us to configure the network with the optimal organization.
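The per-node decision described above (process locally, or offload to a stronger node) can be sketched as a latency-based placement rule. The cost model is an assumption for illustration: compute time is estimated as work divided by throughput, transfer time as input size divided by link bandwidth, and memory is treated as a hard constraint. Energy is not modeled. All class, field, and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    gflops: float        # sustained compute throughput (assumed known)
    free_mem_mb: float
    link_mbps: float     # bandwidth from the data-collecting node to this node


@dataclass
class Task:
    name: str
    gflop: float         # compute needed per input
    mem_mb: float        # memory the model needs
    input_mb: float      # data size to ship if the task is offloaded


def est_latency_s(task, node, local):
    compute = task.gflop / node.gflops
    transfer = 0.0 if local else task.input_mb * 8.0 / node.link_mbps
    return compute + transfer


def place(task, local, peers):
    """Return the node with the lowest estimated latency, or None if none fits."""
    candidates = []
    if local.free_mem_mb >= task.mem_mb:
        candidates.append((est_latency_s(task, local, True), local))
    for p in peers:
        if p.free_mem_mb >= task.mem_mb and p.link_mbps > 0:
            candidates.append((est_latency_s(task, p, False), p))
    if not candidates:
        return None
    return min(candidates, key=lambda c: c[0])[1]


if __name__ == "__main__":
    uav = Node("uav", gflops=10.0, free_mem_mb=500.0, link_mbps=0.0)
    ugv = Node("ugv", gflops=100.0, free_mem_mb=4000.0, link_mbps=80.0)
    detection = Task("object_detection", gflop=20.0, mem_mb=300.0, input_mb=2.0)
    print(place(detection, uav, [ugv]).name)
```

In a live network, the throughput, memory, and bandwidth figures would come from the per-node profiling the abstract describes, rather than from constants.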

A Federated Learning Approach to Camera-Based Contactless Heart Rate Monitoring

REU student: Shibi Ayyanar

Mentors: Zahid Hasan, Emon Dey, Dr. Nirmalya Roy

Abstract—Remote photoplethysmography (rPPG) refers to contactless heart rate detection using regular video camera sensors. However, privacy concerns arise because this requires storing many patients' facial recordings in one location. We identify federated learning (FL) as a prospective solution to these privacy issues that can also improve model accuracy. FL is a machine learning approach in which devices send only model updates, rather than raw data, back to a central server, thereby preserving user privacy. This combination of privacy and functionality makes a federated setup a promising solution for analyzing rPPG data. To the best of our knowledge, federated learning has not yet been explored as a means of maintaining healthcare privacy for rPPG data. In this work, we explore a regression-based FL setup in the rPPG domain. Our primary goal is to create a comprehensive regression model in a federated setup; unlike many existing FL frameworks, our application is regression-based rather than classification-based. Our centralized model will first train on a randomized selection of patient data. Once sufficient training has been completed, we will send the weights of our rPPG model to different nodes (representing local servers), each of which trains on its subjects' data on its local machine. The nodes will then send their updated weights back to the centralized server, and the process repeats until a certain accuracy threshold is met. We also provide a detailed analysis and perform ablation studies of the FL methods in the rPPG domain.
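The round trip described above can be sketched in a few lines: the server sends weights, nodes train locally, the server aggregates, and the loop repeats until a threshold is met. The abstract does not say how returned weights are combined. This sketch assumes FedAvg-style averaging weighted by each node's sample count, and it uses a linear least-squares regressor as a stand-in for the rPPG network. A validation mean absolute error (MAE) serves as the hypothetical stopping threshold. Function names are hypothetical.

```python
import numpy as np


def local_train(w, X, y, lr=0.1, epochs=5):
    """A few epochs of full-batch gradient descent on mean squared error."""
    for _ in range(epochs):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)
        w = w - lr * grad
    return w


def fed_avg(w_init, clients, rounds=100, lr=0.1, local_epochs=5,
            val_data=None, mae_target=None):
    """Federated rounds with sample-size-weighted averaging (FedAvg assumption).

    clients: list of (X, y) pairs that never leave their node; only weights move.
    Stops early once validation MAE <= mae_target, if both are given.
    """
    w = np.array(w_init, dtype=float)
    sizes = np.array([len(y) for _, y in clients], dtype=float)
    for _ in range(rounds):
        local = [local_train(w, X, y, lr, local_epochs) for X, y in clients]
        w = np.average(np.stack(local), axis=0, weights=sizes)
        if val_data is not None and mae_target is not None:
            X_val, y_val = val_data
            if np.mean(np.abs(X_val @ w - y_val)) <= mae_target:
                break
    return w


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    true_w = np.array([2.0, -1.0])
    clients = []
    for _ in range(3):  # three hypothetical local servers
        X = rng.normal(size=(50, 2))
        clients.append((X, X @ true_w))
    print(fed_avg(np.zeros(2), clients))
```

The abstract's initial centralized training step corresponds to passing those pre-trained weights as `w_init` instead of zeros.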

Object Detection Using Texas Instruments mmWave Radar

REU student: Marc Conn

Mentors: Maloy Kumar Devnath, Avijoy Chakma, Dr. Nirmalya Roy

Abstract—Short-wavelength radar is considered for surface object detection because it provides a non-destructive and non-invasive means of inspection. Most of the previous work we reviewed did not consider real environments; it was limited to favorable settings and, for example, did not work at night or in fog. The environment in which data collection takes place greatly affects the waves reflected from objects, which also depend on each object's unique physical and chemical properties. These attributes affect an object's frequency response, which can in turn be used to differentiate objects. To monitor this behavior, we will first integrate a mmWave radar into a TurtleBot3 for object detection. The radar emits Frequency Modulated Continuous Waves (FMCWs) to collect information about the objects within the range scanned by the UGV. A short-wavelength radar's performance is influenced by external factors, including but not limited to a scanned object's permittivity and material composition and the radar's distance from the object. This paper will note such limitations of the Texas Instruments AWR2944 Evaluation Module integrated into a TurtleBot3 Burger.
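FMCW radar recovers range from the beat frequency between the transmitted and received chirps. A target at range $R$ delays the echo by $2R/c$. For a chirp with slope $S = B / T_c$, this delay produces a beat frequency $f_b = 2SR/c$, so $R = c f_b / (2S)$. Range resolution is $c / (2B)$, where $B$ is the bandwidth swept while the ADC is sampling. The sketch below applies these standard relations to a simulated, noise-free single-target beat signal. The chirp bandwidth, duration, sample rate, and sample count are hypothetical values, not the AWR2944 configuration used in the project.

```python
import numpy as np

C = 299_792_458.0  # speed of light, m/s


def chirp_slope(bandwidth_hz, chirp_duration_s):
    """Frequency slope S of a linear chirp, in Hz/s."""
    return bandwidth_hz / chirp_duration_s


def range_from_beat(f_beat_hz, slope_hz_per_s):
    """R = c * f_b / (2 * S)."""
    return C * f_beat_hz / (2.0 * slope_hz_per_s)


def range_resolution(bandwidth_hz):
    """Targets closer together than c / (2B) fall into the same range bin."""
    return C / (2.0 * bandwidth_hz)


def estimate_range(beat_signal, fs_hz, slope_hz_per_s):
    """Single-target range from the FFT peak of a sampled beat signal."""
    windowed = beat_signal * np.hanning(len(beat_signal))  # reduce spectral leakage
    spectrum = np.abs(np.fft.rfft(windowed))
    freqs = np.fft.rfftfreq(len(beat_signal), d=1.0 / fs_hz)
    peak = np.argmax(spectrum[1:]) + 1  # skip the DC bin
    return range_from_beat(freqs[peak], slope_hz_per_s)


if __name__ == "__main__":
    slope = chirp_slope(4e9, 40e-6)  # hypothetical 4 GHz sweep over 40 us
    fs, n = 10e6, 256                # hypothetical ADC rate and samples per chirp
    f_beat = 2 * slope * 2.0 / C     # simulated target at 2 m
    beat = np.cos(2 * np.pi * f_beat * np.arange(n) / fs)
    print(f"estimated range: {estimate_range(beat, fs, slope):.3f} m")
    sampled_bw = slope * n / fs      # bandwidth swept during sampling
    print(f"range resolution: {range_resolution(sampled_bw) * 100:.2f} cm")
```

Real returns from objects with different permittivity change the amplitude and shape of these FFT peaks rather than the range relation itself. Those peak features are what the abstract proposes to use for differentiating objects.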

Deep Transfer Learning Framework For Audio Activity Recognition

REU student: Eduardo Santin

Mentors: Avijoy Chakma, Dr. Nirmalya Roy

Abstract—The proliferation of acoustic devices such as earables and conversational assistants in everyday life has paved the way for audio activity recognition research. As with the ImageNet dataset for images, many large crowdsourced audio datasets have been curated. These crowdsourced datasets are often collected from different sources and contain samples with background noise, music, and conversations that alter the recorded waveform. We hypothesize that such heterogeneity in the data samples will cause suboptimal performance when these datasets are used in a transfer learning approach. We plan to incorporate wearable accelerometer data to overcome these drawbacks in audio activity classification tasks. We consider smart-home activities that involve repetitive hand movements. To relate the time- and frequency-domain features of the accelerometer and audio data, respectively, we collected an in-house dataset from 10 participants using eSense (a pair of earbuds with a microphone and a 6-axis IMU) together with a Shimmer sensor device for the accelerometer data. In our experiments, we used an acoustic model pre-trained on the AudioSet dataset and evaluated it on our dataset. Finally, to boost audio classification performance, we propose a deep multimodal transfer learning framework that leverages the labeled accelerometer data and achieves a substantial performance gain.
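Repetitive hand movements show up in accelerometer data as a periodic component. Simple time-domain statistics and the dominant frequency of the acceleration magnitude can capture that component. The abstract does not list its actual feature set. The features, the function name `accel_features`, and the sampling rate in the example are hypothetical choices for illustration.

```python
import numpy as np


def accel_features(acc, fs_hz):
    """Hypothetical time- and frequency-domain features for one window.

    acc: (N, 3) array of x/y/z acceleration samples; fs_hz: sampling rate.
    """
    acc = np.asarray(acc, dtype=float)
    mag = np.linalg.norm(acc, axis=1)      # orientation-independent magnitude
    centered = mag - mag.mean()            # remove gravity/DC before the FFT
    spectrum = np.abs(np.fft.rfft(centered))
    freqs = np.fft.rfftfreq(len(mag), d=1.0 / fs_hz)
    return {
        "mean": acc.mean(axis=0),          # per-axis mean
        "std": acc.std(axis=0),            # per-axis standard deviation
        "mag_mean": float(mag.mean()),
        "mag_std": float(mag.std()),
        "dominant_freq_hz": float(freqs[np.argmax(spectrum)]),  # repetition rate
    }


if __name__ == "__main__":
    fs = 50                                    # hypothetical sampling rate
    t = np.arange(500) / fs                    # 10 s window
    acc = np.zeros((500, 3))
    acc[:, 2] = 9.81 + 0.5 * np.sin(2 * np.pi * 2 * t)  # 2 Hz repetitive motion
    print(accel_features(acc, fs)["dominant_freq_hz"])
```

Features computed over windows aligned with the audio clips could then be paired with the audio model's embeddings in a multimodal framework.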